The AI Revolution in AEC

Robert K. Otani, PE

Chief Technology Officer at Thornton Tomasetti

July 31, 2024

Rob Otani examines “the new electricity” and its applications.

The Wave Is Upon Us

Artificial Intelligence (AI) has powered its way into the world’s minds in the last two years, largely due to the emergence of ChatGPT and Large Language Model (LLM) technologies. Its simplicity of use for professionals at all levels and its overwhelming capability to learn from millions of reports, texts, images and other media and generate well-written responses have gotten our attention.

While AI has been reshaping industries and daily life for many years in companies like Google and Amazon, its latest LLM and foundation model technologies are now developing new and more powerful capabilities at an unprecedented pace. This poses significant risks and opportunities. From health care to finance, from production staff to executives, AI’s swift integration has revolutionized processes, enhanced decision-making, spurred innovation and created new markets, marking a transformative leap in technological advancement and societal impact on businesses at large. In similar fashion, the architecture, engineering and construction (AEC) industry is equally ripe to be transformed by AI. The question is: Can we harness the power of an apparent new utility? One that transcends “basic” services such as power, light, water and the internet?

We believe we can. CORE studio, Thornton Tomasetti’s R&D technology incubator, has deployed a dedicated AI team of technologists, software developers and data scientists, called CORE.AI, to research how best to leverage AI technologies to automate processes and scale knowledge transfer to accelerate our company’s business.

Diving In

My journey into leveraging AI and machine learning (ML) for structural engineering was sparked by a curiosity about AI/ML in 2014, when “Big Data” was a buzzword at all tech conferences. How we might untangle the Big Data “hairball” was a frequent topic in those days. By accessing and sorting it, perhaps we could find the “stars” in its midst. But there was only one way to find out.

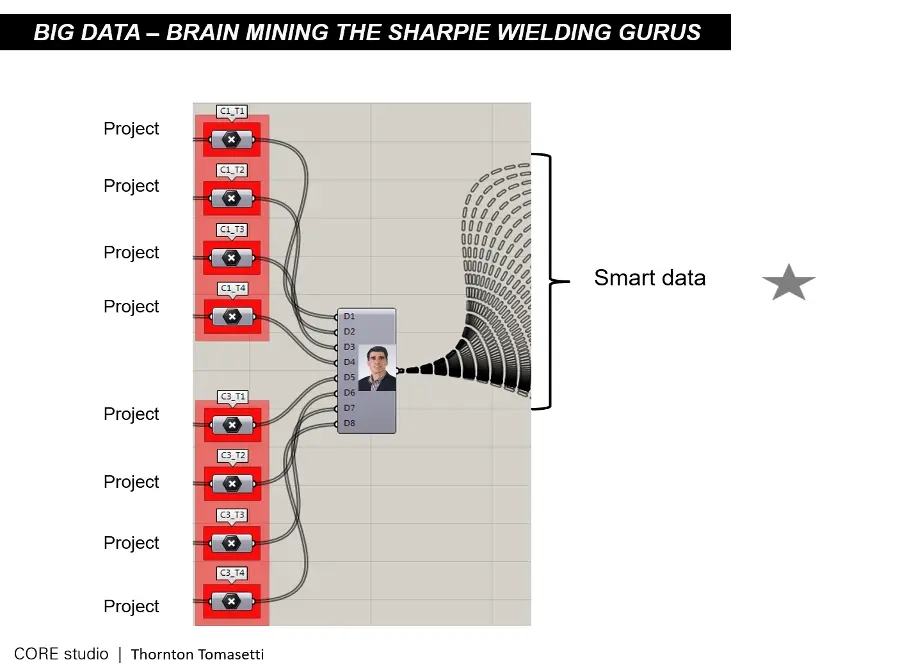

Despite AI’s great promise, it wasn’t yet clear to me how big data was going to help our engineering business until I started to research and understand how AI/ML works myself. Ten years ago, at AECTech 2014 (Thornton Tomasetti CORE studio’s annual technology symposium), I gave a presentation entitled “Symbiosis.” It shared my speculative view about how “Big Data” could add significant value to business enterprises, based on having the capability to filter big data and extract the “intelligent” aspects of our consulting practice from the corpus of past and present deliverables (e.g., technical reports, drawings and Enterprise Resource Planning/Customer Relationship Management data). Being able to store and share that intelligent information with everyone at the firm at the right times in a project offered great potential, I posited. The symbiotic aspect of this premise and its potential is that harnessing intelligent data from experienced engineers would offer a means of knowledge-sharing with young engineers. Moreover, the global shared intelligence of the firm would grow exponentially with every generation, and the process — and its virtuous, self-reinforcing cycle — would continue. After all, engineering consultancies are valued based on our intelligence and quality. Drawings and reports are only byproducts.

What I know in retrospect is that I was conjecturing about a data-crunching tool that could learn from those smart bits of filtered data and store them in an application to enable scalable access by the entire firm.

Welcome to the age of AI/ML.

Image of a Data Hairball in AECO (2014)

Image of the Intelligent Aspects of a Project’s Data (2014)

Image of Our Then CEO Illustrating the Parsing of Knowledge (2014)

The Power of Learning

We can now see that AI’s ability to process and “learn” from vast amounts of data in minutes or hours, quickly and accurately, and to reach a level of accumulated knowledge that takes a person many years to acquire, has the power to transform AEC business practices. How buildings and infrastructure are designed, analyzed, constructed and maintained will be reimagined by AI processes, which, at the least, will accelerate early design phases by a minimum of 20-30%. Beyond making these phases faster, AI processes will simultaneously enable better quality by using AI-powered, synchronized, multidisciplinary design predictions with connected dependencies.

Lessons Learned from AI/ML R&D: Asterisk 1.0

In our early investigations, CORE studio’s AI/ML R&D team started developing machine learning models for structural member sizing and developed one of the first, if not the first, fully automated ML-powered building design web applications, called Asterisk (thorntontomasetti.com), which was released in 2018. Asterisk predicts structural member sizing for steel and concrete high-rise buildings with approximately 90% “building code-level” design accuracy in under 30 seconds. The equivalent manual task would take a team of engineers at least two weeks to complete for a single option, at 100% accuracy. Asterisk’s underlying concept was not to replace what a human does, but to leverage the automation of building ML “designs” to perform a multitude of schemes and provide the design architect and client with a multi-objective solutions array that weighed cost, embodied carbon and materiality.
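One common way to present a multi-objective solutions array is to keep only the schemes that no other scheme beats on every objective (a Pareto front). The sketch below illustrates that idea only; it is not a description of how Asterisk ranks options. The `Scheme` type, the scheme names and all numbers are hypothetical.

```python
from typing import NamedTuple


class Scheme(NamedTuple):
    """One candidate structural scheme (hypothetical fields and units)."""
    name: str
    cost: float    # relative cost index, lower is better
    carbon: float  # relative embodied-carbon index, lower is better


def dominates(a: Scheme, b: Scheme) -> bool:
    """True if `a` is no worse than `b` on both objectives and better on one."""
    no_worse = a.cost <= b.cost and a.carbon <= b.carbon
    better = a.cost < b.cost or a.carbon < b.carbon
    return no_worse and better


def pareto_front(schemes: list[Scheme]) -> list[Scheme]:
    """Keep the schemes that no other scheme dominates."""
    return [s for s in schemes if not any(dominates(o, s) for o in schemes)]


# Illustrative values only, not project data.
schemes = [
    Scheme("steel frame", cost=100.0, carbon=80.0),
    Scheme("concrete frame", cost=90.0, carbon=95.0),
    Scheme("composite frame", cost=110.0, carbon=85.0),
    Scheme("timber hybrid", cost=120.0, carbon=60.0),
]

front = pareto_front(schemes)
for scheme in front:
    print(scheme.name, scheme.cost, scheme.carbon)
```

Here the composite frame drops out because the steel frame is both cheaper and lower in carbon; the remaining three schemes represent genuine trade-offs for the architect and client to weigh.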

Despite Asterisk’s groundbreaking nature by any standard, our engineers at Thornton Tomasetti were reluctant to adopt an ML-powered tool for several reasons, including a lack of trust in the results (ML automations tend to be mysterious, black-box operations) and the fact that, in 2018, engineers were not prepared to accept a fully automated tool. However, with rising awareness of AI/ML in the industry, Asterisk has become a much-desired and highly accepted tool.

In 2021, Asterisk’s concrete column machine learning model was integrated into TestFit, a real estate feasibility platform, and in 2023 the second generation of ML models was integrated into Skema.AI, an AI program similar in nature to Asterisk.

AI/ML Models with Speed and Accuracy: Asterisk 2.0

While the first series of structural ML models in Asterisk 1.0 was effective, the models’ accuracy relative to code-based designs needed improvement. They also took a bit too long to execute. Asterisk’s ML models were created from a “synthetic” dataset, generated by computing millions of optimized solutions across numerous independent variables, either with encoded structural code-based algorithms or with a commercially available finite element program running analyses in batches over a Cartesian product of sets. The combination of the non-optimized methods our team originally used and the limited computing power of early CPUs and GPUs made the creation of structural ML models a slow process, on occasion lasting months.
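The idea of sweeping a Cartesian product of input sets to build a synthetic dataset can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the CORE studio pipeline: the three parameter lists and the `design_beam` function are hypothetical stand-ins for the encoded code-based algorithms or finite element batch runs described above.

```python
from itertools import product

# Hypothetical independent variables; a real sweep would use far more values.
spans_m = [6.0, 9.0, 12.0]
loads_kn_per_m = [10.0, 20.0, 30.0]
yield_strengths_mpa = [250.0, 350.0]


def design_beam(span_m: float, load_kn_per_m: float, fy_mpa: float) -> float:
    """Placeholder solver: section modulus (cm^3) at which the elastic bending
    stress of a simply supported beam under uniform load equals fy.
    Uses M = w * L^2 / 8 and S = M / fy. Not a code-compliant design check."""
    moment_knm = load_kn_per_m * span_m ** 2 / 8.0
    return moment_knm * 1e3 / fy_mpa  # kN*m / MPa -> cm^3


def generate_dataset() -> list[dict]:
    """Evaluate the solver on every combination of the input sets."""
    rows = []
    for span, load, fy in product(spans_m, loads_kn_per_m, yield_strengths_mpa):
        rows.append({
            "span_m": span,
            "load_kn_per_m": load,
            "fy_mpa": fy,
            "section_modulus_cm3": design_beam(span, load, fy),
        })
    return rows


dataset = generate_dataset()
print(len(dataset), "rows;", "first row:", dataset[0])
```

The row count is the product of the set sizes, which is why such sweeps grow into the millions quickly and why data generation time becomes a cost worth optimizing.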

But over the last three years, we have been optimizing our end-to-end process and can now train most structural ML models in less than a week. In some promising studies, we can train multi-parameterized models using reinforcement learning in an hour. Data generation and training optimization is a prioritized goal for economy and model tuning, as well as supporting our firm’s goal of carbon footprint reduction. As a benefit, our target ML model accuracy is 95% or better for all our ML models. This means with minor tuning, models will almost always predict design options that exceed minimum building code requirements, and that design parity is achieved when studying various materiality or system options (i.e., cases in which qualitative optioneering is only possible when accuracy among all possible design options is similar). For engineers, accuracy level is paramount from a safety standpoint as well as for enabling trust in our ML models’ predictions.

Scaling Enterprise AI: CORTEX-AI.IO

An equally powerful optimization of our end-to-end ML applications is the MLOps (Machine Learning Operations) platform that CORE studio recently developed, called CORTEX-AI.IO. Asterisk 1.0 had all of its ML models locked into the web application code base. This meant that modifying the logic, or changing or adding ML models, was an arduous coding process that would take weeks or months, depending on whether any of the inner logic or dependencies within the application needed modification.

According to the website Databricks, “MLOps is a core function of machine learning engineering, focused on streamlining the process of taking machine learning models to production and then maintaining and monitoring them.”1 CORTEX-AI.IO stores datasets and experiments (trained models with tuned hyperparameters and algorithms) and allows simple deployment of a trained model to a REST API for use by an external web application. In lay terms, CORTEX is essentially an app store for ML models at scale.

Internally, we can now link a multitude of ML models (e.g., steel beam framing, columns, braced frames and foundations) so that they are logically connected with dependencies to create a complete ML building configurator, similar to Asterisk 1.0, or simply deploy any one of our structural framing member models as a single micro app. The flexibility of this ecosystem using CORTEX-AI.IO allows us to configure our ML structural apps to nearly any level of complexity, building typology, structural materiality, wind or seismic zone, building height and combination thereof.
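Linking models "with dependencies" amounts to running them in an order in which each model's upstream results are available before it is called. The sketch below shows one way to do that with Python's standard `graphlib` module. It is an assumption-laden illustration, not CORTEX-AI.IO code: the three `predict_*` functions, their formulas and the input names are hypothetical stand-ins for deployed ML models.

```python
from graphlib import TopologicalSorter


# Hypothetical stand-ins for deployed models. Each takes the shared building
# inputs plus the results of the models that ran before it.
def predict_beams(inputs, upstream):
    return {"beam_load_kn": inputs["floor_load_kpa"] * inputs["bay_area_m2"]}


def predict_columns(inputs, upstream):
    return {"column_load_kn": upstream["beams"]["beam_load_kn"] * inputs["storeys"]}


def predict_foundations(inputs, upstream):
    return {"footing_area_m2": upstream["columns"]["column_load_kn"] / inputs["bearing_kpa"]}


MODELS = {
    "beams": predict_beams,
    "columns": predict_columns,
    "foundations": predict_foundations,
}

# Each model maps to the set of models whose output it needs first.
DEPENDS_ON = {
    "beams": set(),
    "columns": {"beams"},
    "foundations": {"columns"},
}


def run_configurator(inputs: dict) -> dict:
    """Run every model after all of its dependencies have produced results."""
    results = {}
    for name in TopologicalSorter(DEPENDS_ON).static_order():
        results[name] = MODELS[name](inputs, results)
    return results


results = run_configurator(
    {"floor_load_kpa": 5.0, "bay_area_m2": 81.0, "storeys": 10, "bearing_kpa": 300.0}
)
print(results)
```

Keeping the dependency map separate from the model functions means a model can be swapped or added by editing two dictionaries rather than the application logic, which is the flexibility the paragraph above describes.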

Business Enterprise and Large Language Models

In 2022, the world — in particular, the business world — changed when OpenAI’s ChatGPT was released and went viral. To have an almost real-time chat with the entirety of the internet’s data and subsequent “intelligence” is mind-blowing, considering the best information search tools we had until that time were search engines like Google, which only found links to content as opposed to advanced text generation and responses in well-written paragraphs. In my opinion, ChatGPT is orders of magnitude better than Google, even when functioning only as a search engine.

Serendipitously, one of our data scientists was working on an R&D project to train an AI model to understand and learn from our company’s intranet data, with its years of technical discussions and company news. But when ChatGPT and its corresponding LLM API were released, the R&D project changed radically. Using a technique called retrieval-augmented generation (RAG), which pairs a pretrained LLM with an external data source (in this case, our internal intranet text), our CORE.AI team was able to prototype a custom GPT pipeline we called “TTGPT.” TTGPT successfully found and contextualized years of firm knowledge in seconds with a simple prompt.
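The retrieval half of RAG can be illustrated with a toy example: rank stored passages by similarity to the question, then place the best matches in the prompt sent to the LLM. The sketch below is not TTGPT. It assumes a simple bag-of-words cosine similarity where production systems typically use learned embeddings, the three documents are invented, and the final call to an LLM is deliberately left out.

```python
import math
import re
from collections import Counter

# Invented example passages standing in for intranet content.
DOCUMENTS = [
    "Post-tensioned slab cracking was traced to early formwork removal.",
    "Wind tunnel testing reduced the design base shear on a tall tower.",
    "The office holiday party is scheduled for December.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(query, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Assemble the augmented prompt that would be sent to a pretrained LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("What caused cracking in the post-tensioned slab?", DOCUMENTS)
print(prompt)
```

Because the LLM only sees what retrieval hands it, the quality of the answer depends heavily on how well the source documents are indexed and ranked.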

What this effectively meant was that an engineer could have a conversation with an AI that could read thousands or millions of documents in real time. More specifically, this allowed years of institutional knowledge to be accessed by everyone at the firm almost instantaneously. From a business standpoint, this kind of access is a critical aspect of our existence. AEC firms’ “secret sauce” is our collective knowledge learned over many years. Traditionally, it had been passed on through direct, interpersonal interactions, but those traditional knowledge-transfer interactions are not scalable in a multi-office and multi-continent firm. Using LLMs and accessing smart data sources, they are. Enterprise-level chatbots using LLMs will now be standard protocol for access to expertise from past and present sources.

AI/ML, Robotic Process Automation and Augmented AI/ML

Robotic process automation (RPA), also known as software robotics, uses intelligent automation technologies to perform the repetitive office tasks of human workers, such as extracting data, filling in forms, moving files and more.2 RPA is not new, but our team has been creating RPA applications by using AI to combine multiple manual, repeated processes into a single process. Engineering tasks, many of which are rule-based, are prime applications for RPA. CORE.AI’s current truss design tool is at least 100 times faster than the best engineer. By our latest validations and tests, it is also more precise, because it combines finite element model (FEM) simulations, ML models that predict truss member sizes, encoded optimization routines normally carried out manually by a person and non-ML code checks for validation. Of course, a truss is only one structural element in a building, and an engineer may need to design one only occasionally. Yet, over time, the tool will save thousands of person-hours. Even better, since it has been rigorously validated and has an independent, physics-based, self-checking mechanism for quality control, it informs engineers whenever a design fails its checks rather than letting a mistake pass silently. CORE.AI’s venture in the RPA space, using AI to automate and/or augment multiple manual processes with precision, is the future of engineering and AI.
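The pattern of pairing an ML prediction with an independent, non-ML check can be sketched as follows. This is a simplified illustration, not the CORE.AI truss tool: `predict_area_mm2` is a deliberately imperfect stand-in for an ML model, the axial stress check and its 0.9 factor are assumed for the example, and the step-up loop is a hypothetical substitute for the encoded optimization routines.

```python
def predict_area_mm2(force_kn: float) -> float:
    """Stand-in for an ML model: a deliberately imperfect linear fit."""
    return 3.0 * force_kn + 50.0


def passes_check(force_kn: float, area_mm2: float,
                 fy_mpa: float = 350.0, factor: float = 0.9) -> bool:
    """Independent physics-based check: axial stress must not exceed factor * fy."""
    stress_mpa = force_kn * 1e3 / area_mm2
    return stress_mpa <= factor * fy_mpa


def size_member(force_kn: float, step_mm2: float = 50.0, max_iter: int = 20) -> dict:
    """Start from the ML prediction, verify it, and report any failure."""
    area = predict_area_mm2(force_kn)
    flagged = not passes_check(force_kn, area)  # the raw prediction failed the check
    for _ in range(max_iter):
        if passes_check(force_kn, area):
            return {"area_mm2": area, "ml_prediction_flagged": flagged, "ok": True}
        area += step_mm2  # step up until the independent check is satisfied
    return {"area_mm2": area, "ml_prediction_flagged": True, "ok": False}


print(size_member(100.0))
print(size_member(1000.0))
```

In this sketch the prediction for the 1000 kN member fails the stress check, so the result is flagged and the area is stepped up until the check passes; the engineer sees both the final size and the fact that the raw prediction was not accepted.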

Structural Health Monitoring and Digital Twins

Structural health monitoring (SHM) in combination with digital twin technology that leverages AI’s power is on the verge of reducing the overall building and infrastructure costs, improving the overall health of our world’s infrastructure and automating how our infrastructure is maintained and prioritized. AI now has the capability to learn from past data, such as years of inspection reports and real-time data from IoT sensors and drones. This allows prioritized and predictive maintenance actions at scale without relying on a multitude of disparate and incomplete resources. Our CORE.AI team is researching AI methods to digitize and correlate past reports and findings with physical assets such as buildings and bridges. Next, we layer on sensory data to allow performance predictions and automated capital expenditure prioritizations based on structural health. Automating those processes and adding real-time intelligence to our aging infrastructure will save costs and extend the lives of our urban assets.

AI/ML Customization and De-Risking Tasks

Of course, any automated software tool, whether an AI tool like ChatGPT or a non-AI tool like Excel, can make mistakes, from hallucinations in the former to formulaic errors in the latter. In both situations, it is difficult to decipher how and when mistakes are made. In ChatGPT’s case, it is always advisable to find a non-AI-generated source to double-check its writing. In the case of Excel, it is the norm at engineering firms to always double-check an Excel-generated result independently. At Thornton Tomasetti, we have developed an “AI Guiding Principles” document for the entire staff to put forth a best-practices guide on how to use and how not to use AI. Over time, this document will need to adapt to the ever-changing AI landscape and complexity. In the meantime, every firm needs to educate its staff about the power and risks of AI. Our CORE.AI team has been customizing our AI apps to be self-checking through non-AI methods and recommending that AI/ML be used only during early design phases, both of which serve to de-risk AI.

Future Learning

As AI/ML automates low-level tasks, many of my colleagues and I have legitimate concerns that young engineers will not learn key engineering insights like the older generation did by solving problems manually many times over many years. Instead, an AI/ML tool will complete those low-level tasks automatically. I believe, as younger generations of engineers have grown up in the digital/automated age, they will learn significantly faster than my generation did by playing with those same AI/ML apps, just as they did with video games or by having conversations with those same AI/ML applications directly or through an AI agent. Digital learning has improved significantly as a response to the COVID era. Through my own experience with online resources, certain training aspects would not even be possible without the vast access of digital resources now available. In the near future, every AEC employee will have a smart AI-generated copilot and mentor to provide resources and advice when needed.

The Road Ahead

AI/ML will transform all business functions of AEC, from technical tasks and administrative functions to accounting, finance and marketing. It is certain that multitudes of robots will work in the field in construction. This is why Dr. Andrew Ng, cofounder of Google Brain, says, “AI is the New Electricity.” It seems we have found our new utility: The power of artificial intelligence is in our hands. It is paramount that all AEC firms begin to leverage AI because it now has the capacity to automate routine tasks and is already transforming how knowledge is shared.

In the future, firms that leverage AI will reap significant gains in bottom-line performance and will discover new business lines that will grow their firms and ensure they are lasting and resilient for generations to come.

Footnotes:

1 “MLOps,” Databricks, https://www.databricks.com/glossary/mlops (accessed June 12, 2024).

2 IBM.com

Robert K. Otani, PE, is chief technology officer at Thornton Tomasetti, Inc., a 1700+ person, multidisciplinary engineering and applied science firm, and founded the CORE studio, a digital design, application development, AI and R&D group at his firm. He has 30 years of structural design experience involving commercial, infrastructure, institutional, cultural and residential structures on projects totaling over $3 billion USD of construction and has led the development of numerous software applications, including Konstru, Design Explorer, Beacon and Asterisk, the first ML-powered structural engineering application in the industry.